Alexa Prize SimBot Challenge

Participated as a member of the EMMA finalist team

I was part of the Heriot-Watt University team for the Alexa Prize SimBot Challenge. We were one of the finalist teams.

About the challenge

The SimBot Challenge is a voice-controlled game set in the simulated environment of Alexa Arena. The objective of the game is to complete various missions by directing a virtual agent. Because players cannot interact with the environment directly, the agent executes their instructions and also communicates with them, offering suggestions or asking for clarification. During the competition, real users played the game on Amazon Echo Show devices and rated the bots based on their overall experience.

Our approach

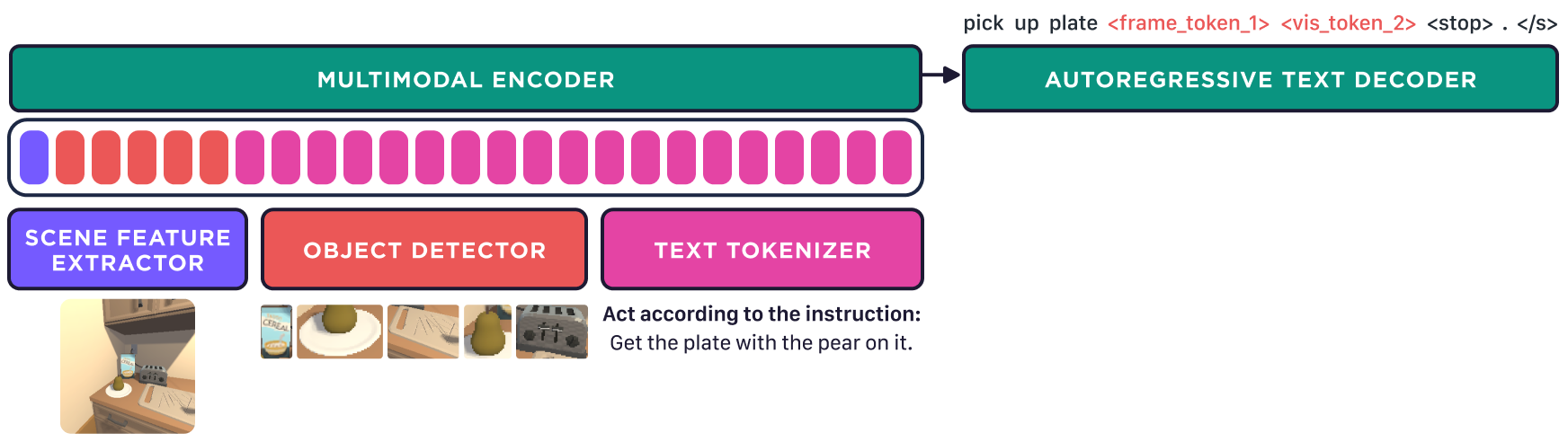

Our approach is based on developing the EMMA model with the following architecture:

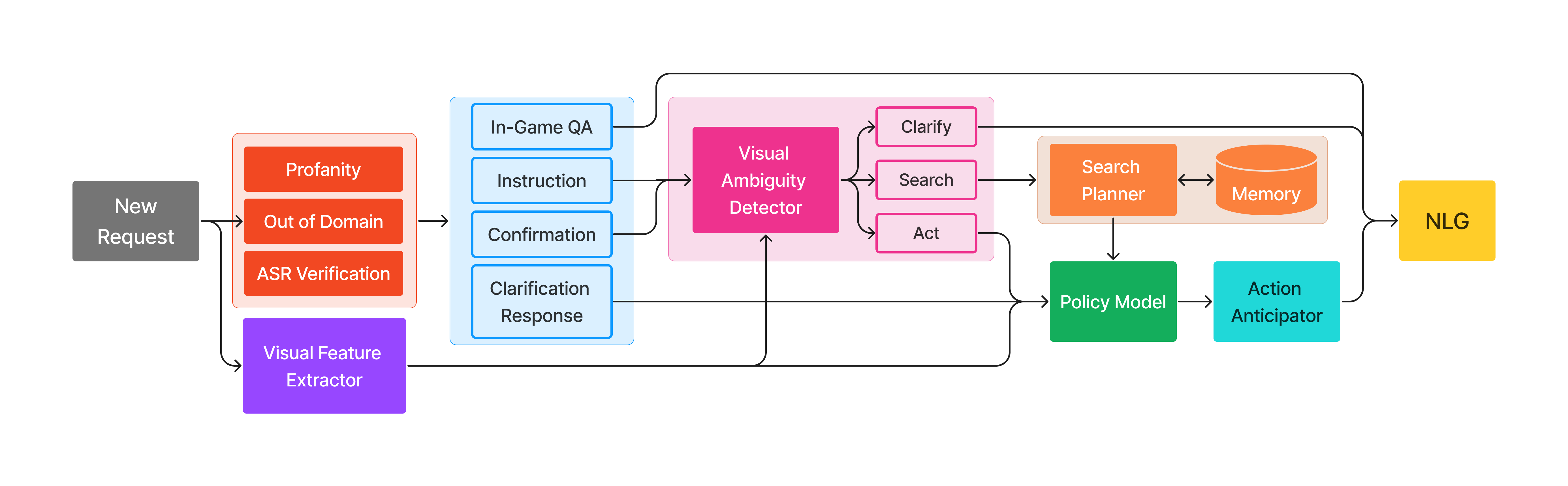

The model is pretrained on multiple vision and language tasks to obtain language grounding capabilities. The downstream instruction-following task is decomposed into two subtasks, Visual Ambiguity Detection and Policy (action prediction), so that the decision to request clarification is decoupled from predicting the next action.
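To make the decoupling concrete, here is a minimal sketch of the control flow, assuming a detector that either returns a clarification question or nothing, and a policy that returns an action. The names `respond`, `toy_ambiguity_detector`, and `toy_policy`, the action strings, and the rule "ambiguous when a mentioned object appears more than once in view" are hypothetical stand-ins for the learned model heads, not the actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Decision:
    kind: str  # "clarify" or "act"
    text: str  # clarification question or (hypothetical) action string


def respond(
    instruction: str,
    frame_objects: List[str],
    detect_ambiguity: Callable[[str, List[str]], Optional[str]],
    policy: Callable[[str, List[str]], str],
) -> Decision:
    """Ask for clarification if the detector fires; otherwise defer to the policy."""
    question = detect_ambiguity(instruction, frame_objects)
    if question is not None:
        return Decision("clarify", question)
    return Decision("act", policy(instruction, frame_objects))


def toy_ambiguity_detector(instruction: str, frame_objects: List[str]) -> Optional[str]:
    # Hypothetical rule: a mentioned object is ambiguous if several copies are visible.
    text = instruction.lower()
    for label in dict.fromkeys(frame_objects):  # unique labels, in order of appearance
        count = frame_objects.count(label)
        if label in text and count > 1:
            return f"I can see {count} {label}s. Which one do you mean?"
    return None


def toy_policy(instruction: str, frame_objects: List[str]) -> str:
    # Hypothetical policy: move to the first visible object named in the instruction.
    text = instruction.lower()
    for label in frame_objects:
        if label in text:
            return f"goto {label}"
    return "explore"
```

With two mugs in view, `respond("Pick up the mug", ["mug", "mug", "table"], toy_ambiguity_detector, toy_policy)` yields a `clarify` decision, while "Go to the table" passes straight through to the policy.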

To ensure the robustness of our system when interacting with real users, we developed additional functionalities through separate modules. These include out-of-domain detection to catch adversarial or out-of-scope user utterances, in-game QA to answer frequent questions about the game or the agent, and a symbolic search and memory module to efficiently locate objects in the environment. Check our paper for more details!
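The page does not describe how these modules are wired together, so the following is only an illustrative sketch of one plausible routing order: out-of-domain check, then in-game QA, then a symbolic memory lookup, and finally fallback to the model. The keyword list, the `FAQ` table, the `(room, viewpoint)` location format, and the names `SymbolicMemory` and `route` are all hypothetical toy stand-ins, not the deployed modules.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical location format: (room name, viewpoint index).
Location = Tuple[str, int]


class SymbolicMemory:
    """Remembers where each object label was most recently observed."""

    def __init__(self) -> None:
        self._last_seen: Dict[str, Location] = {}

    def observe(self, labels: Iterable[str], location: Location) -> None:
        for label in labels:
            self._last_seen[label] = location  # newer sightings overwrite older ones

    def locate(self, label: str) -> Optional[Location]:
        return self._last_seen.get(label)


# Toy keyword stand-in for an out-of-domain detector.
OUT_OF_DOMAIN_KEYWORDS = {"weather", "stock", "joke"}

# Hypothetical canned answers for the in-game QA module.
FAQ = {
    "what can you do": "I can move around, pick up objects and use appliances.",
}


def route(utterance: str, memory: SymbolicMemory, known_labels: Iterable[str]) -> str:
    """Return which module handles the utterance, prefixed with its name."""
    text = utterance.lower().strip(" ?!.")
    if any(word in OUT_OF_DOMAIN_KEYWORDS for word in text.split()):
        return "ood: Sorry, I can only help with the mission."
    if text in FAQ:
        return "qa: " + FAQ[text]
    for label in known_labels:
        if label in text:
            location = memory.locate(label)
            if location is not None:
                return f"search: go to {location[0]} viewpoint {location[1]}"
    return "model"  # fall through to the learned instruction-following model
```

Putting cheap symbolic checks before the model means off-topic questions and searches for previously seen objects never reach the policy at all.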

Takeaways

- We developed an encoder-decoder model that encodes both images and videos (i.e., sequences of frames) and generates natural language tokens conditioned on task-specific prompts.

- We observed strong generalization performance to unseen missions, as well as zero-shot transfer from the Alexa Arena to real-world scenes.

- One limitation of our system is that it was trained with the expectation that users would provide concrete instructions. As a result, it sometimes struggles when users instead provide high-level mission goals.

- After the challenge, we focused on the core EMMA component and showed further benefits from joint finetuning across the downstream embodied tasks. This work has been accepted as a paper at EMNLP 2023.

References

2023

- EMMA: A Foundation Model for Embodied, Interactive, Multimodal Task Completion in 3D Environments. Alexa Prize SimBot Challenge Proceedings, 2023.